DORA: Digital Object-Recognition Audio-Assistant for the Visually Impaired

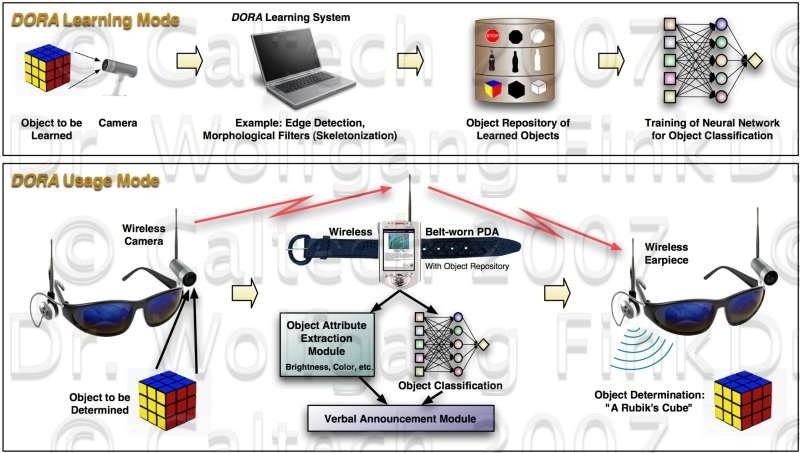

Purpose: To provide a camera-based object detection system for severely visually impaired or blind patients. The system recognizes surrounding objects and their descriptive attributes (brightness, color, etc.) and announces them on demand to the patient via a computer-based voice synthesizer.

Methods: A digital camera mounted on the patient’s eyeglasses or head captures images on demand, e.g., at the push of a button or on a voice command. Near-real-time image processing algorithms determine the brightness (bright, medium, or dark), the color (according to a predefined color palette), and the content of the captured image frame. The content is determined as follows: First, edge detection is applied to a central region of the frame only, to avoid disturbing effects along the image border. The resulting edge pattern is then classified by artificial neural networks that have been previously trained on a list of identifiable everyday objects such as dollar bills, credit cards, cups, and plates. After image processing, a descriptive sentence is constructed from the classified object and its descriptive attributes (brightness, color, etc.), e.g., “This is a dark blue cup”. A computer-based voice synthesizer then announces this sentence verbally to the severely visually impaired or blind patient.
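The attribute and sentence-construction steps described in the Methods can be sketched as follows. This is a minimal illustration under stated assumptions, not DORA's actual implementation: the palette entries, the brightness thresholds, the 25% border margin, the use of Rec. 601 luma weights, and all function names are hypothetical. Edge detection and the neural-network classifier are omitted; the object name is passed in as a stand-in for the classifier output.

```python
from typing import Dict, List, Tuple

Pixel = Tuple[int, int, int]   # (R, G, B), each channel 0-255
Image = List[List[Pixel]]      # rows of pixels

# Hypothetical palette: the source only mentions "a predefined color palette".
PALETTE: Dict[str, Pixel] = {
    "red": (255, 0, 0),
    "green": (0, 128, 0),
    "blue": (0, 0, 255),
    "yellow": (255, 255, 0),
    "white": (255, 255, 255),
    "black": (0, 0, 0),
}


def central_region(image: Image, margin: float = 0.25) -> Image:
    """Crop a fraction `margin` (assumed value, must be < 0.5) off each side."""
    height, width = len(image), len(image[0])
    dy, dx = int(height * margin), int(width * margin)
    return [row[dx:width - dx] for row in image[dy:height - dy]]


def mean_color(image: Image) -> Tuple[float, float, float]:
    """Average each RGB channel over all pixels of the image."""
    pixels = [p for row in image for p in row]
    n = len(pixels)
    return (
        sum(p[0] for p in pixels) / n,
        sum(p[1] for p in pixels) / n,
        sum(p[2] for p in pixels) / n,
    )


def brightness_label(rgb: Tuple[float, float, float],
                     dark_below: float = 85.0,
                     bright_above: float = 170.0) -> str:
    """Map luma to "dark", "medium", or "bright" (thresholds are assumptions)."""
    r, g, b = rgb
    luma = 0.299 * r + 0.587 * g + 0.114 * b  # Rec. 601 luma weights
    if luma < dark_below:
        return "dark"
    if luma > bright_above:
        return "bright"
    return "medium"


def color_label(rgb: Tuple[float, float, float]) -> str:
    """Return the palette name nearest to `rgb` by squared RGB distance."""
    return min(
        PALETTE,
        key=lambda name: sum((a - b) ** 2 for a, b in zip(rgb, PALETTE[name])),
    )


def describe(image: Image, object_name: str) -> str:
    """Build the sentence to be spoken; `object_name` stands in for the classifier."""
    rgb = mean_color(central_region(image))
    return f"This is a {brightness_label(rgb)} {color_label(rgb)} {object_name}"


# Usage: an 8x8 frame with a dark blue center and a white border.
DARK_BLUE, WHITE = (0, 0, 139), (255, 255, 255)
frame = [
    [DARK_BLUE if 2 <= x < 6 and 2 <= y < 6 else WHITE for x in range(8)]
    for y in range(8)
]
print(describe(frame, "cup"))  # This is a dark blue cup
```

Restricting the analysis to the central crop keeps the white border in this toy frame from influencing the attributes, mirroring the central-region restriction the Methods apply to edge detection. The resulting string would then be handed to a voice synthesizer.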

Results: We have created a Mac OS X version of the digital object recognition audio-assistant (DORA) outlined above, using a FireWire camera. DORA currently comprises algorithms for brightness, color, and edge detection. A basic object recognition system, using artificial neural networks and capable of recognizing a set of pre-trained objects, is currently implemented.

Conclusions: Severely visually impaired patients, blind patients, and blind patients with retinal implants alike can benefit from a system such as the object recognition assistant presented here. With the basic infrastructure (image capture, image analysis, and verbal image content announcement) in place, this system can be expanded to include attributes such as object size and distance, or, by means of an IR-sensitive camera, to provide basic “sight” in poor visibility (e.g., foggy weather) or even at night.

Wolfgang Fink, Ph.D.

Senior Researcher at Jet Propulsion Laboratory (JPL)

Visiting Research Associate Professor of Ophthalmology at University of Southern California (USC)

Visiting Research Associate Professor of Neurosurgery at University of Southern California (USC)

Visiting Associate in Physics at California Institute of Technology (Caltech)

Mailing Address:

California Institute of Technology

Visual and Autonomous Exploration Systems Research Laboratory

15 Keith Spalding (corner of E. California Blvd & S. Wilson Ave)

Mail Code 103-33

Pasadena, CA 91125

USA

Phone: 1-626-395-4587

Fax: 1-626-395-4587

E-mail: wfink@autonomy.caltech.edu

Licensing Contact

Office of Technology Transfer at Caltech.